School of Psychology - Georgia Institute of Technology

SWAN is a project of the Psychology Department's Sonification Lab at the Georgia Institute of Technology, overseen by Professor Bruce Walker in partnership with Professor Frank Dellaert of the College of Computing. We also collaborate with the GT Biomedical Information Technology Center, the Atlanta VA, and the Atlanta Center for the Visually Impaired (CVI).

Background

There is a continuing need for a portable, practical, and highly functional navigation aid for people with vision loss. This includes temporary vision loss, such as firefighters in a smoke-filled building, as well as long-term or permanent blindness. In either case, the user needs to move from place to place, avoid obstacles, and learn the details of the environment.

SWAN Architecture

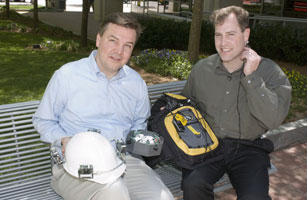

The core system is a small computer (either a lightweight laptop or an even smaller handheld device) with a variety of location and orientation tracking technologies, including GPS, inertial sensors, a pedometer, RFID tags, RF sensors, and a compass, among others. Sophisticated sensor fusion is used to determine the best estimate of the user's location and which way she is facing. See the SWAN architecture figure (available as a PDF or PNG image) for more details of the components. You can also find out more about the bone conduction headphones, or "bonephones", that we use to present the audio interface to the user on our Bonephones Research page.
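The page does not say which fusion method SWAN uses for orientation. As one illustration of what fusing a heading estimate can look like, here is a minimal sketch of a complementary filter, a common textbook technique that is not necessarily SWAN's: it integrates a gyroscope's turn rate for short-term accuracy and lets the compass slowly correct the gyro's drift. The function names, the use of radians, and the default `alpha = 0.98` are assumptions chosen for illustration.

```python
import math


def wrap_angle(angle: float) -> float:
    """Wrap an angle in radians into the interval [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


def fuse_heading(prev_heading: float, gyro_rate: float, compass_heading: float,
                 dt: float, alpha: float = 0.98) -> float:
    """One complementary-filter step for heading (all angles in radians).

    gyro_rate is the turn rate in rad/s; dt is the time step in seconds.
    alpha near 1 trusts the integrated gyro more; 0.98 is an arbitrary
    illustrative value, not a tuned SWAN parameter.
    """
    # Predict: integrate the gyro turn rate (accurate short-term, drifts long-term)
    predicted = prev_heading + gyro_rate * dt
    # Correct: use the wrapped compass error, so 350 deg vs 10 deg is a 20 deg gap
    error = wrap_angle(compass_heading - predicted)
    # Equivalent to alpha * predicted + (1 - alpha) * compass, but safe at the wrap point
    return wrap_angle(predicted + (1.0 - alpha) * error)
```

With a stationary gyro and a steady compass reading, repeated calls converge toward the compass heading. A single noisy compass sample moves the estimate by only `(1 - alpha)` of its error, which is the point of blending the two sensors.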

Once the user's location and heading are determined, SWAN uses an audio-only interface (a series of non-speech sounds called "beacons") to guide the listener along a path, while also indicating the location of other important features in the environment (see below). SWAN includes sounds for the following purposes:

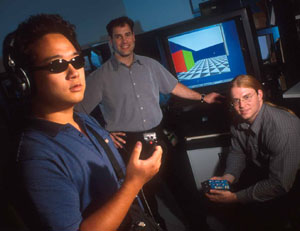
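The basic beacon idea (a sound placed at the next waypoint, which moves on once that waypoint is reached) can be sketched as follows. This is a hypothetical illustration, not SWAN's implementation. It assumes a local planar frame in metres, a heading in radians measured clockwise from north, an arbitrary 1.5 m capture radius, and a simple sine stereo pan as a crude stand-in for true spatial audio rendering. The names `Point`, `relative_bearing`, `stereo_pan`, and `BeaconGuide` are invented for this example.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Point:
    x: float  # metres east in a local planar frame (assumption)
    y: float  # metres north


def relative_bearing(user: Point, heading: float, target: Point) -> float:
    """Direction of target relative to where the user faces, in [-pi, pi).

    heading is clockwise from north; a positive result means the target
    is to the user's right.
    """
    absolute = math.atan2(target.x - user.x, target.y - user.y)  # clockwise from north
    return (absolute - heading + math.pi) % (2.0 * math.pi) - math.pi


def stereo_pan(rel_bearing: float) -> float:
    """Map a relative bearing to a pan value from -1 (full left) to 1 (full right)."""
    return math.sin(rel_bearing)


class BeaconGuide:
    """Steps a single beacon through a list of waypoints."""

    def __init__(self, waypoints: Sequence[Point], capture_radius: float = 1.5):
        self.waypoints = list(waypoints)
        self.capture_radius = capture_radius  # hypothetical value, in metres
        self.index = 0  # waypoint the beacon currently sounds from

    def update(self, user: Point, heading: float) -> Optional[float]:
        """Return the pan for the active beacon, or None once the path is done."""
        while self.index < len(self.waypoints):
            wp = self.waypoints[self.index]
            if math.dist((user.x, user.y), (wp.x, wp.y)) <= self.capture_radius:
                self.index += 1  # waypoint captured: beacon moves to the next one
            else:
                return stereo_pan(relative_bearing(user, heading, wp))
        return None
```

A sine pan cannot distinguish a beacon in front of the listener from one behind, which is one reason spatial audio systems generally use head-related transfer functions rather than simple left/right panning.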

To develop the auditory display, we have also built a VR test environment in which we can try out new audio features, study usability and learnability, and develop training and practice regimens. The picture to the right shows some of the VR test system in use, and we have a brief video showing a user navigating a short path with "sonar pulse" beacons. <QuickTime> (3 MB)

Publications and Media Resources

Walker, B. N., & Lindsay, J. (2006). Navigation performance with a virtual auditory display: Effects of beacon sound, capture radius, and practice. Human Factors, 48(2), 265-278. <PDF>

Walker, B. N., & Lindsay, J. (2005). Using virtual reality to prototype auditory navigation displays. Assistive Technology Journal, 17(1), 72-81. <PDF>

Oh, S. M., Tariq, S., Walker, B. N., & Dellaert, F. (2004). Map-based priors for localization. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2004), Sendai, Japan (28 September - 2 October). <PDF>

PowerPoint Presentations and Diagrams:

SWAN Architecture Diagram <PDF> <PNG image>

SWAN System PowerPoint Presentation (Brief: <PDF>) (Long: <PDF> (2 MB))

Other Media and Videos:

Discovery Channel Canada, Daily Planet Show, January 29, 2007. Wiring up blind people to help them find their way. Complete WMV file (21 MB)

SWAN Video Segment from CNN "Pioneers" series <Windows Media> (5 MB)

SWAN Audio VR Test System Demo Video <QuickTime> (3 MB)

Map-based Navigation Video <QuickTime MOV>

A top-down map view of a user moving along a path on campus. White areas are sidewalks, gray areas are grass, and black areas are buildings. The grayscale value represents the probability that the user is actually in that spot: the white sidewalks are highly likely, and the black buildings are highly unlikely (assuming an outdoor path). The system takes this map information into account, along with GPS measurements, in determining location.

The red path shows the raw GPS readings. The green path shows the "corrected" or "smoothed" location estimate produced by the SWAN system. The blue dots show the particles used in a particle filter; the centroid of the particles is used as the location estimate and produces the green path. Note that even when the raw GPS location is off the path, the green "corrected" path remains on the path. As the user approaches the edge of the map, the system automatically jumps to the next map tile, keeping the user roughly in the center of the map.

The diamonds in the video indicate the locations of waypoints along the path. The SWAN audio system places a virtual audio beacon at the waypoint's location, so the user simply walks toward the apparent source of the sound. When the user reaches a waypoint, the beacon sound moves to "come from" the next waypoint. In this demo, the beacon is a ping sound that is not spatialized, and its tempo increases as the user approaches the waypoint. A chime indicates that the user has reached the waypoint, after which the beacon moves to the next one.
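The localization described in this video (a grayscale map acting as a prior, combined with GPS in a particle filter whose centroid is the location estimate) can be sketched as one predict/weight/resample cycle. This is a minimal illustration, not the SWAN code. It assumes the map is a grid of probabilities in [0, 1] with row 0 at y = 0, that GPS error is an isotropic Gaussian with a hypothetical 5 m standard deviation, and that a per-step motion estimate (for example, from pedometer and compass dead reckoning) is available. The functions `map_prior` and `particle_filter_step` and all parameter values are invented for this example, and map-tile switching is omitted.

```python
import math
import random


def map_prior(grid, cell_size, x, y):
    """Map-based prior: grid[row][col] in [0, 1], e.g. 1.0 for sidewalk,
    an intermediate value for grass, 0.0 for a building.
    Points off the map get a small floor value instead of zero."""
    col, row = int(x // cell_size), int(y // cell_size)
    if 0 <= row < len(grid) and 0 <= col < len(grid[0]):
        return grid[row][col]
    return 1e-3


def particle_filter_step(particles, motion, gps, grid, cell_size,
                         gps_sigma=5.0, motion_sigma=0.5, rng=random):
    """One predict/weight/resample cycle; returns (new_particles, centroid)."""
    dx, dy = motion
    # 1. Predict: shift every particle by the dead-reckoned motion plus noise
    moved = [(x + dx + rng.gauss(0.0, motion_sigma),
              y + dy + rng.gauss(0.0, motion_sigma)) for x, y in particles]
    # 2. Weight: map prior times an isotropic Gaussian GPS likelihood
    gx, gy = gps
    weights = [map_prior(grid, cell_size, x, y)
               * math.exp(-((x - gx) ** 2 + (y - gy) ** 2) / (2.0 * gps_sigma ** 2))
               for x, y in moved]
    if sum(weights) == 0.0:
        weights = [1.0] * len(moved)  # every weight is zero: fall back to uniform
    # 3. Resample with replacement, in proportion to weight
    resampled = rng.choices(moved, weights=weights, k=len(moved))
    # 4. Estimate: the centroid of the resampled particle cloud
    n = len(resampled)
    centroid = (sum(p[0] for p in resampled) / n, sum(p[1] for p in resampled) / n)
    return resampled, centroid
```

Because sidewalk cells carry most of the prior weight, particles that drift onto grass or into buildings tend to be resampled away. This is how the centroid can stay on the path even when a single GPS fix lands off it.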

Press Coverage of the SWAN Project:

The SWAN system has been covered by several media outlets, including CNN, the Guardian, Technology Review, UPI, and many others. Please see our PressCoverage page for links to several of those stories, as well as other Sonification Lab coverage.

Acknowledgments

This material is based upon work supported by the National Science Foundation under Grant No. IIS-0534332 awarded to Bruce Walker and Frank Dellaert. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation. The research has also been supported by a GVU Seed Grant to support a graduate student in the GT College of Computing.

Contact: