- Research Overview

The Sonification Lab is actively involved in many research projects, usually involving interdisciplinary teams from all over Georgia Tech, and often from all over the world. Some of the more recent and major ones are listed below. Some projects have separate web sites for more information, and most projects have items in the Publications list. For information about getting involved in our research (paid, volunteer, credit, collaborator), please see our Opportunities page, where there is a list of personnel needs for the projects. Alternatively, you can simply contact us for more details.

- Research "Pillars"

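The current core research activities in the Sonification Lab generally seek to study and apply auditory and multimodal user interfaces in one of four main topic domains, or "pillars": Formal Education, Informal Learning Environments, Electronic Devices, and Driving and In-Vehicle Technologies.

In addition, we have many other projects in other sonification research and applications, human-computer interaction research, and accessible games and sports.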


- Formal Education Pillar

How can we use sonification, auditory displays, and sound in general to help make formal education (i.e., material taught in schools) more effective, more engaging, and more universally accessible? There are many issues to tackle, including perception, cognition, training, technology, policy, and accessibility of the technology and the information to all learners. Our current focus is on science, technology, engineering, and math (so-called "STEM"), as well as statistics.

- ALADDIN: Making Education Materials More Accessible

Higher education teaching materials too often have inaccessible charts, graphs, videos, pictures, diagrams, maps, simulations, etc. Georgia Tech's AccessCORPS has created an AI-supported, human-in-the-loop tool, Automated LLM-Assisted Document Descriptions for INclusion (ALADDIN), to streamline image classification and the generation of alt text.

[project details...]

- Auditory Graph Design and Context Cues

Creating a (visual) graph without axes, tick marks, or labels will generally earn you an 'F' in high school math class. After all, it is just a squiggle on a page without the added context that those elements provide. Auditory graphs require the same elements, so we are studying how best to create them and introduce them into an auditory graph, and examining how people learn to use them for better (auditory) graph comprehension.

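To make the idea of context cues concrete, here is a minimal Python sketch that turns a data series into a list of auditory graph events. It assumes a linear value-to-frequency mapping with positive polarity (higher values sound higher) and adds an x-axis "tick mark" click every few data points; the function name, frequency range, and tick spacing are hypothetical choices for illustration, not the lab's actual design.

```python
from dataclasses import dataclass


@dataclass
class Event:
    time_s: float   # onset time in seconds
    kind: str       # "data" = data tone, "tick" = x-axis context click
    freq_hz: float  # pitch of the event in Hz


def auditory_graph(ys, duration_s=4.0, f_min=220.0, f_max=880.0,
                   tick_every=5, tick_freq=2000.0):
    """Map each y value to a pitch and add x-axis tick clicks.

    Linear value-to-frequency mapping with positive polarity; one tick
    click is placed at every `tick_every`-th data point.
    """
    lo, hi = min(ys), max(ys)
    span = (hi - lo) or 1.0          # avoid dividing by zero for flat data
    step = duration_s / len(ys)      # time between successive data points
    events = []
    for i, y in enumerate(ys):
        t = i * step
        if i % tick_every == 0:
            events.append(Event(t, "tick", tick_freq))
        freq = f_min + (y - lo) / span * (f_max - f_min)
        events.append(Event(t, "data", freq))
    return events


if __name__ == "__main__":
    for e in auditory_graph([3, 1, 4, 1, 5, 9, 2, 6], duration_s=2.0, tick_every=4):
        print(e)
```

A linear frequency scale is the simplest choice; mapping values to pitch in semitones (log frequency) is a common alternative, because listeners perceive pitch intervals roughly logarithmically.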

[project details...]

- Individual Differences and Training in Auditory Displays

Every person hears things slightly differently. How can we determine, in advance, what difference these variations will make (if any) in the perception and comprehension of auditory graphs and sonification? What characteristics of the listener predict performance? We are studying a range of factors, including perception, cognition, and listening experience. One major factor we are considering is whether a listener is sighted or blind and, if blind, at what age blindness occurred.


Sonifications and auditory graphs are relatively new, so few people have experience with them. As a result, there is a great need to understand how to train listeners to use and interpret auditory displays. We are looking at training types, including simple exposure, part-task training, whole-task training, and others, to determine what works best and under what circumstances.


[project details...]


- Informal Learning Environments Pillar

Much learning takes place outside of schools, in informal learning environments (ILEs) such as museums, science centers, aquaria, and other field trip sites. Auditory displays and sonification, along with music and other sounds, can play a major role in increasing successful participation in these informal learning places. We need to understand what participation, learning, and entertainment mean, and how we can implement multimodal technology to effectively support all visitors, including those who may have disabilities or special needs.

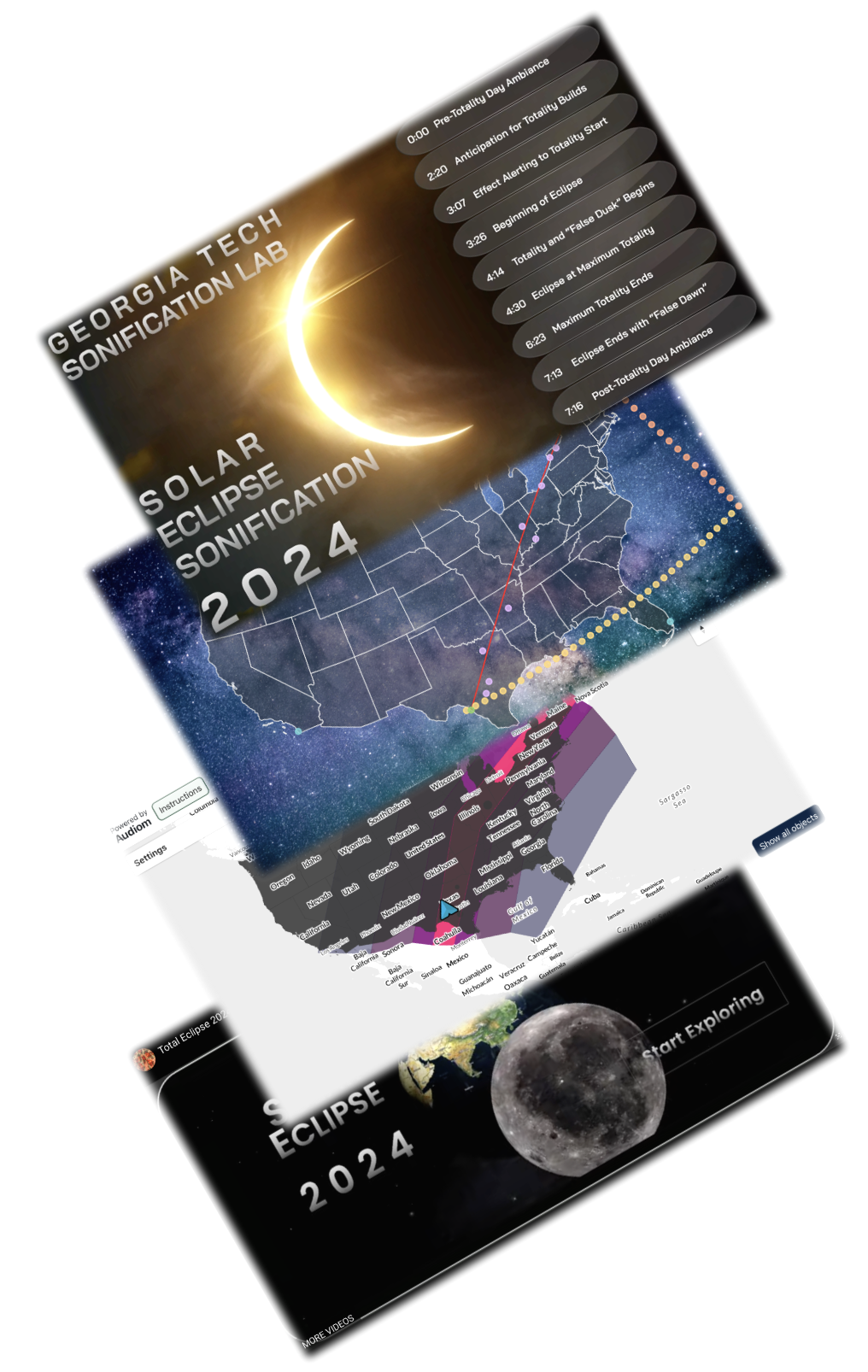

- Solar Eclipse 2024 Resources: Accessible Maps, VR App, Soundtrack

We have developed several fantastic resources to help educate and entertain you about the 2024 Total Solar Eclipse that is passing over North America on April 8, 2024.

There are two accessible maps (one "push" style, one "pull" style) that convey the path of totality and the regions of partial eclipse. Because they are multimodal, they are designed to be accessible even to screen reader users.

There is an immersive VR experience in which you can view the eclipse from Rochester, NY, and wander around the town as the light levels fade, animal behaviors change, and environmental sounds evolve...and learn about the eclipse in the VR environment. The VR experience is available either as an immersive APK for Quest headsets or as an iPhone app that you can install from the App Store.

We have a YouTube video that allows you to preview the VR experience.

We also have the stand-alone soundtrack available for your enjoyment, either as a WAV file or in the form of a YouTube video.


[project details...]

- Solar Eclipse 2017 Sonification

We have developed a soundtrack and sonification of the 2017 Solar Eclipse to help persons with vision loss (and anyone, really!) experience the eclipse. The soundtrack is composed of three sound layers: the pre-composed base track, which conveys the temporal dynamics; additional sounds that represent the "false dusk" and "false dawn"; and a layer of musical sounds that are generated live, in real time, based on meteorological data.


[project details...]

- The Accessible Aquarium Project

Museums, science centers, zoos, and aquaria are faced with educating and entertaining an increasingly diverse visitor population with varying physical and sensory needs. There are very few guidelines to help these facilities develop non-visual exhibit information, especially for dynamic exhibits. In an effort to make such informal learning environments (ILEs) more accessible to visually impaired visitors, the Georgia Tech Accessible Aquarium Project is studying auditory display and sonification methods for use in exhibit interpretation.


[project details...]


- Electronic Devices Pillar

Many electronic devices, ranging from computers to mobile phones, to home appliances, can be more usable, more effective, more entertaining, and much more universally accessible when they have an appropriate and well-designed multimodal interface. Often, this means complementing or enhancing an existing visual display with an auditory display. In some cases, a purely auditory interface is appropriate.

- Advanced Auditory Menus

A common and practical application of sound in the human-computer interface is the auditory menu. Generally, menu items are spoken via text-to-speech (TTS), and the user simply navigates to the menu item of interest and presses a button to enter that menu or execute the menu command. We are studying advances to this paradigm, including new interaction techniques, in desktop interfaces, mobile devices, and in-vehicle interfaces, among others. Enhancement techniques include, for example, "spearcons", "spindex", and auditory scrollbars. Check out our Auditory Menus Tech Report, or our many other publications and software.

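As a rough illustration of the spindex idea, the Python sketch below assumes that each item's cue is simply its spoken initial letter, played before the full TTS label, and that during fast scrolling only the letter cue is spoken. The functions are hypothetical and only produce the text to be spoken; a real menu would hand these strings to a TTS engine.

```python
def spindex_cue(item: str) -> str:
    """Spindex cue for a menu item: its initial letter, uppercased."""
    stripped = item.strip()
    return stripped[0].upper() if stripped else ""


def announcements(items: list[str], fast_scroll: bool) -> list[str]:
    """Text a hypothetical auditory menu would speak for each item.

    Fast scrolling: only the short letter cue, so the user hears their
    position in the alphabet. Otherwise: the cue, then the full TTS label.
    """
    spoken = []
    for item in items:
        cue = spindex_cue(item)
        spoken.append(cue if fast_scroll else f"{cue}, {item}")
    return spoken


menu = ["Alice", "Amir", "Bob", "Carmen"]
print(announcements(menu, fast_scroll=True))  # ['A', 'A', 'B', 'C']
```

The short letter cue is what makes long alphabetical lists (e.g., a phone's contact list) quicker to skim: the listener can tell when the menu has moved from the A's to the B's without waiting for each full name.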

[project details...]

- Bone Conduction Headsets ("bonephones")

For many real-world applications that use auditory displays, covering the ears of the user is not acceptable (e.g., pedestrians amongst traffic; firefighters who need to communicate). We are studying the use of bone conduction technology as a method of transmitting auditory information to a listener without covering the ears. Our research ranges from basic psychophysics (minimum hearing thresholds, frequency response, etc.) all the way up to 3D audio via bonephones (yes, it can be done!).


[project details...]


- Driving and In-Vehicle Technologies Pillar

Driving is a very challenging task, with considerable visual attention being required at all times. Modern vehicles include many secondary tasks ranging from entertainment (e.g., radio or MP3 players) to navigation (e.g., GPS systems), and beyond (e.g., electronic communications, social media). While it is generally safest to do no secondary tasks while driving, carefully designed auditory user interfaces can allow safer completion of secondary tasks. Further, multimodal displays can serve as assistive technologies to help novice, tired, or angry drivers perform better, as well as helping drivers with special challenges, such as those who have had a traumatic brain injury or other disability. See the GT Sonification Lab Driving Research web page.

- In-Vehicle Assistive Technology (IVAT) Project

The IVAT Project is focusing on helping drivers who have perceptual, cognitive, attention, decision-making, and emotion regulation challenges, often the result of traumatic brain injury (TBI). Carefully designed software solutions, running on hardware that is integrated into the vehicle's data streams, are proving to be very effective in helping these individuals be better, safer, more independent drivers. We are leveraging our experience in multimodal user interfaces of all types, and utilizing the many facilities available to us, including the GT School of Psychology's Driving Research Facility.


[project details...]


- Other Sonification Research & Applications

This research seeks to discover the optimal data-to-display mappings for use in scientific sonification and investigate whether these optimal mappings vary within and/or across fields of application.

- BUZZ: Audio User Experience (audioUX) Scale

We have developed a scale composed of 11 questions that can efficiently and effectively evaluate the salient aspects of an auditory user interface, or the audio aspects of a multimodal user interface. It can be used in conjunction with SUS, UMUX, UMUX-Lite, NASA TLX, etc.


[project details...]

- Solar Eclipse 2017 Sonification

We have developed a soundtrack and sonification of the 2017 Solar Eclipse to help persons with vision loss (and anyone, really!) experience the eclipse. The soundtrack is composed of three sound layers: the pre-composed base track, which conveys the temporal dynamics; additional sounds that represent the "false dusk" and "false dawn"; and a layer of musical sounds that are generated live, in real time, based on meteorological data.


[project details...]

- SWAN: System for Wearable Audio Navigation

SWAN is an audio-only wayfinding and navigation system to help those who cannot see (temporarily or permanently) to navigate and learn about their environment. It uses a combination of spatialized speech and non-speech sounds to lead a user along a path to a destination, while presenting sounds indicating the location of environmental features such as benches, shops, buildings, rooms, emergency exits, etc. It is useful as an assistive technology for people with functional vision loss, and also as a tool for firefighters, first responders, or other tactical personnel.

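The core geometry behind a beacon that "leads a user along a path" can be sketched as follows. This Python example assumes flat 2-D coordinates in meters and substitutes a simple constant-power stereo pan for the spatialized audio that SWAN uses; the function names and the clamping rule are illustrative assumptions, not SWAN's implementation.

```python
import math


def relative_bearing_deg(user_xy, heading_deg, target_xy):
    """Bearing of a target relative to the user's facing direction.

    Flat 2-D coordinates in meters (x = east, y = north); heading is in
    degrees clockwise from north. Result is in (-180, 180]; positive means
    the target is to the user's right.
    """
    dx = target_xy[0] - user_xy[0]
    dy = target_xy[1] - user_xy[1]
    absolute = math.degrees(math.atan2(dx, dy))  # clockwise from north
    rel = (absolute - heading_deg) % 360.0
    return rel - 360.0 if rel > 180.0 else rel


def stereo_gains(rel_deg):
    """Constant-power (left, right) gains for a beacon at rel_deg.

    Crude stand-in for 3-D spatialization: a stereo pan cannot convey
    front/back, so targets behind the listener are clamped to the nearer side.
    """
    clamped = max(-90.0, min(90.0, rel_deg))
    pan = clamped / 90.0                  # -1 = hard left, +1 = hard right
    angle = (pan + 1.0) * math.pi / 4.0   # 0 .. pi/2
    return math.cos(angle), math.sin(angle)
```

For example, a user at the origin facing east (heading 90) with the next waypoint due north gets a relative bearing of -90 degrees, so the beacon plays entirely in the left ear. Constant-power panning keeps left² + right² = 1, so the beacon's loudness does not dip as it sweeps across the center.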

[project details...]

- Sonification Studio (new as of Dec 2021)

Simple yet powerful ONLINE software package for creating data sonifications and both visual and auditory graphs. Built in collaboration with Highcharts, makers of popular and powerful online charting tools...and as a replacement for the aging (and no longer supported) Sonification Sandbox.

Includes the ability to create or import data (including from Google Sheets), map data to sound parameters in multiple flexible ways, add contextual sounds like click tracks and notifications, and save the resulting sound, chart, or data files. Written in JavaScript, leveraging web audio and other online tools.

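The following Python sketch shows the general kind of data-to-sound mapping described above: values are rescaled to a pitch range (with a polarity switch) and snapped to semitones, while the position of each data point drives stereo pan. It is not Sonification Studio's code (which is written in JavaScript); the function names and default ranges are hypothetical.

```python
from typing import Sequence


def map_linear(values: Sequence[float], out_lo: float, out_hi: float,
               polarity: int = +1) -> list[float]:
    """Rescale data into [out_lo, out_hi]; polarity -1 inverts the mapping."""
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0           # flat data maps to out_lo
    out = []
    for v in values:
        frac = (v - lo) / span
        if polarity < 0:
            frac = 1.0 - frac
        out.append(out_lo + frac * (out_hi - out_lo))
    return out


def midi_to_hz(note: float) -> float:
    """Equal-tempered conversion with A4 (MIDI note 69) = 440 Hz."""
    return 440.0 * 2.0 ** ((note - 69.0) / 12.0)


def sonify(values, note_range=(48, 84), pan_range=(-1.0, 1.0)):
    """Hypothetical two-parameter mapping: value -> pitch, index -> pan."""
    notes = [round(n) for n in map_linear(values, *note_range)]  # snap to semitones
    pans = map_linear(range(len(values)), *pan_range)            # left to right
    return [(midi_to_hz(n), p) for n, p in zip(notes, pans)]
```

Inverting polarity is useful for quantities that listeners tend to hear "the other way round" (for instance, a falling pitch for increasing depth), which is one reason flexible mappings matter.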

[project details & link to online app...]

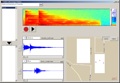

- Sonification Sandbox (not actively developed or supported)

Simple yet powerful software package for creating data sonifications and auditory graphs. Includes the ability to import data, map data to sound parameters in multiple flexible ways, add contextual sounds like click tracks and notifications, and save the resulting sound file. Written in cross-platform Java/JavaSound. (No longer under development, and no longer supported. See the online replacement, Sonification Studio.)


[project details & download...]

- Audio Abacus

Innovative method to display specific data values, like the exact price of a stock or the precise temperature. Standalone application, or plugin for the Sonification Sandbox. Written in cross-platform Java/JavaSound.

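One way to picture a digit-by-digit display of an exact value is sketched below in Python. The encoding (digit d played as MIDI note 60 + d, most significant digit first, with a silent gap for the decimal point) is an assumption chosen for illustration and is not necessarily the scheme the Audio Abacus uses.

```python
# Hypothetical digit-to-pitch table: digit d is played as MIDI note 60 + d.
BASE_NOTE = 60
DIGITS = "0123456789"


def encode_value(text):
    """Turn a decimal string such as "12.5" into (kind, midi_note) events.

    Each digit becomes one tone, most significant first; the decimal point
    becomes a silent "gap" so whole units can be told apart from fractions.
    """
    events = []
    for ch in text:
        if ch in DIGITS:
            events.append(("tone", BASE_NOTE + DIGITS.index(ch)))
        elif ch == ".":
            events.append(("gap", None))
        else:
            raise ValueError(f"unsupported character: {ch!r}")
    return events
```

Taking the value as a string rather than a float sidesteps binary rounding (e.g., 0.1 + 0.2 printing as 0.30000000000000004), which matters when the whole point is to convey the exact value.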

[details & download...]

- SoundScapes: Ecological Peripheral Auditory Displays

We use natural sounds such as birdcalls, insect songs, rain, and thunder to create an immersive soundscape to sonify continuous data such as the stock market index. This approach leads to a display that can be easily distinguished from the background when necessary, but can also be allowed to fade out of attention, and not be tiring or "intrusive" when not desired.

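A minimal sketch of such an ecological mapping, assuming a per-period percent change in a market index as input: rain density tracks the size of the move, birdsong marks gains, and thunder marks large drops. The mapping, the thresholds, and the function name are hypothetical; a real soundscape would also smooth these parameters over time so the display stays unobtrusive.

```python
def soundscape_layers(pct_change: float, max_rain_rate: float = 20.0,
                      thunder_threshold: float = -2.0) -> dict:
    """Map one period's percent change in an index to soundscape parameters.

    Hypothetical mapping: rain density grows with the size of the move,
    birdsong plays on up-moves, and thunder marks large drops.
    """
    # Normalize moves of 0-5 % to 0..1; larger moves saturate at full rain.
    magnitude = min(abs(pct_change), 5.0) / 5.0
    return {
        "rain_drops_per_s": magnitude * max_rain_rate,
        "birdsong": pct_change > 0,
        "thunder": pct_change <= thunder_threshold,
    }
```

Because every layer is a familiar natural sound, a quiet day (light rain, occasional birds) can recede into the background, while a sharp drop (heavy rain plus thunder) stands out without an explicit alarm.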

[project details...]

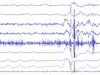

- Brainwave Sonification

Turning EEG data into 3D spatialized audio in order to study source location, type, and timing for neural events. The multichannel EEG data are turned into multichannel audio, via direct audification or via a transformation into a sonification.

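Direct audification can be sketched in a few lines of standard-library Python: each EEG channel is peak-normalized and written as one channel of a 16-bit WAV file. Writing the file at an audio rate (44.1 kHz here, an assumed default) instead of the EEG recording rate compresses time, which shifts slow rhythms upward into the audible range. The 3D spatialization step is not shown, and the function name is hypothetical.

```python
import array
import sys
import wave


def audify(channels, out_path, playback_rate=44100):
    """Write multichannel data directly to a 16-bit PCM WAV file.

    `channels` holds one equal-length list of samples per EEG electrode.
    Each channel is peak-normalized independently, then samples are
    interleaved frame by frame (ch0, ch1, ..., ch0, ch1, ...).
    """
    n = len(channels[0])
    if any(len(ch) != n for ch in channels):
        raise ValueError("all channels must have the same length")
    scaled = []
    for ch in channels:
        peak = max(abs(s) for s in ch) or 1.0   # a silent channel stays silent
        scaled.append([int(round(s / peak * 32767)) for s in ch])
    frames = array.array(
        "h", (scaled[c][i] for i in range(n) for c in range(len(scaled))))
    if sys.byteorder == "big":      # WAV PCM data is little-endian
        frames.byteswap()
    with wave.open(out_path, "wb") as wf:
        wf.setnchannels(len(channels))
        wf.setsampwidth(2)                 # 16-bit samples
        wf.setframerate(playback_rate)     # audio rate, not the EEG rate
        wf.writeframes(frames.tobytes())
```

For instance, EEG recorded at 256 Hz and written at 44,100 Hz is sped up by a factor of about 172, so a 10 Hz alpha rhythm is heard at roughly 1.7 kHz.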

- MAD Monkey

MAD Monkey is software that allows a designer to prototype a spatialized audio environment, and it contains much of the functionality that a full-fledged design environment should have.

MAD Monkey is written in MATLAB. Any computer with MATLAB installed should be able to run the application.


[project details & download...]


- Human Computer Interaction Research

While we have projects underway in all aspects of HCI, we focus primarily on non-traditional interfaces. This means pretty much anything other than the usual WIMP (Windows, Icons, Menus, & Pointers) type of interface: everything from novel hardware interfaces, challenging usage environments, and uncommon or highly specific user needs to new and unexplored task domains. Examples include submarine control & display, space station tasks, interfaces for persons with visual impairments, medical and military tactical interfaces, and multimodal and non-visual interfaces. In addition to the projects listed above...

- Human Factors and Medical Technology

With the increasing use of technology in the practice of medicine, we have begun to study both the individual display components (both auditory and visual) and how the technology is deployed. The MedTech Project is a collaborative effort to examine how the physical arrangement of technology (e.g., a desktop computer in a doctor's examination room) affects the quality, or perceived quality, of patient care.


- Eye Tracking and HCI

Modern interface design can be informed by the results of eye tracking studies, in addition to the more traditional reaction time and accuracy studies. We are applying eye tracking techniques to examine interaction with, for example, dialog boxes and other widgets.



- Accessible Games and Sports

The GT Sonification Lab is studying how to make games and sports more accessible to people with disabilities. This includes being a spectator, as well as an active participant! We are partners in the Games @ Georgia Tech Initiative.

We have several Accessible Games and Sports projects underway, such as the Accessible Fantasy Sports project, Aquarium Fugue, Audio Lemonade Stand, STEM Education Games, MIDI Mercury, and more.

[games and sports project webpage...]
